
Facial Expression Analysis and Synthesis

Overview

The Facial Expression project seeks to automatically record and analyze human facial expressions and to synthesize corresponding facial animation. Analysis and synthesis of facial expressions are central to the goal of responsive and empathetic human-computer interfaces. On the analysis side, the computer can detect and react to subtle sentiments reflected on the user's face. On the synthesis side, the computer can present a comfortable and familiar visage to the user. While computer analysis of speech has been the subject of extensive research, nonspeech facial gestures have received less attention. Natural communication in virtual settings will require developing computational facility with facial gestures.

Facial Expression Analysis

This project deals with the creation, control, and animation of 3D representations of human faces. The primary application of this research is 3D teleconferencing. Current state-of-the-art 2D teleconferencing applications cannot maintain eye contact between participants. Because of network bandwidth constraints, participants are forced to look at small images of one another's faces on a flat screen. The problem is amplified when there are more than two participants. We are developing technology that uses 3D representations of participants' faces to preserve eye contact and to assemble the resulting avatars into seamless virtual environments.

Facial Expression Cloning

Expression Cloning adapts animated facial expressions to different computer face models, allowing manually created animation to be reused with characters of different proportions. In the arena of virtual presence, expression cloning allows freedom in the choice of an avatar. This is desirable both for expressive purposes (e.g., in gaming) and when anonymity is desired (as when dealing with salespeople or other strangers in virtual spaces). Expression cloning is part of a general IMSC effort toward expressive human interaction in virtual and augmented reality environments.
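The page does not describe how cloning is performed. As a minimal illustration only, assuming the source and target models already share a dense vertex correspondence (the hypothetical `correspondence` array below), one could transfer each source vertex's displacement from its neutral pose to the matched target vertex, scaled by the ratio of the two models' bounding-box diagonals. The function name and the uniform-scaling rule are assumptions made for this sketch, not the project's method.

```python
import numpy as np


def clone_expression(src_neutral, src_expr, tgt_neutral, correspondence):
    """Transfer per-vertex displacements from a source face to a target face.

    Hypothetical sketch: correspondence[i] is the index of the source vertex
    matched to target vertex i. Displacements are scaled uniformly by the
    ratio of the models' bounding-box diagonals (an assumed, crude rule).
    """
    src_neutral = np.asarray(src_neutral, dtype=float)
    src_expr = np.asarray(src_expr, dtype=float)
    tgt_neutral = np.asarray(tgt_neutral, dtype=float)

    # Motion of each source vertex relative to the neutral (rest) pose.
    displacement = src_expr - src_neutral

    def diagonal(vertices):
        # Length of the axis-aligned bounding-box diagonal.
        return np.linalg.norm(vertices.max(axis=0) - vertices.min(axis=0))

    # A larger target face receives proportionally larger motion.
    scale = diagonal(tgt_neutral) / diagonal(src_neutral)

    # Apply the matched source displacement to every target vertex.
    return tgt_neutral + scale * displacement[np.asarray(correspondence)]
```

A single uniform scale is the crudest possible choice: it ignores local differences in proportion (for example, a wider mouth or a longer nose), which a practical system would need to handle region by region.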

Human Face Modeling and Animation

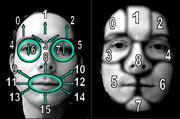

The goal is to create photo-realistic 3D meshes of human faces, including geometry and texture maps. Our method is based on a pair of calibrated stereo cameras and volume morphing techniques. We take several pictures of the person and create 3D meshes from them. A generic 3D model containing animation parameters is then fitted to the shape of the new person using volume morphing. Some point correspondences are specified manually.
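The text does not give the morphing formulation. One common way to realize volume morphing from sparse point correspondences is scattered-data interpolation with radial basis functions (RBFs): a smooth 3D warp is fitted so that each landmark on the generic model lands exactly on its counterpart on the reconstructed mesh, and every other vertex of the generic model is carried along by the same warp. The sketch below assumes that approach, uses the kernel φ(r) = r plus an affine term, and introduces a hypothetical `fit_volume_morph` function; it illustrates the technique and is not the project's implementation.

```python
import numpy as np


def fit_volume_morph(src_pts, dst_pts):
    """Fit an RBF warp R^3 -> R^3 mapping src_pts exactly onto dst_pts.

    Assumed formulation: f(x) = sum_i w_i * |x - p_i| + A^T [1, x].
    Needs at least 4 non-coplanar landmarks so the system is solvable.
    """
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    n = len(src)

    # Kernel matrix with phi(r) = r between every pair of landmarks.
    phi = np.linalg.norm(src[:, None, :] - src[None, :, :], axis=-1)
    # Affine (degree-1 polynomial) part: rows [1, x, y, z].
    poly = np.hstack([np.ones((n, 1)), src])

    # Block system [[Phi, P], [P^T, 0]] [W; A] = [dst; 0].
    system = np.zeros((n + 4, n + 4))
    system[:n, :n] = phi
    system[:n, n:] = poly
    system[n:, :n] = poly.T
    rhs = np.zeros((n + 4, 3))
    rhs[:n] = dst
    coeffs = np.linalg.solve(system, rhs)
    weights, affine = coeffs[:n], coeffs[n:]

    def morph(points):
        """Apply the fitted warp to an (m, 3) array of points."""
        x = np.asarray(points, dtype=float)
        k = np.linalg.norm(x[:, None, :] - src[None, :, :], axis=-1)
        return k @ weights + np.hstack([np.ones((len(x), 1)), x]) @ affine

    return morph
```

In use, `src_pts` would be landmarks on the generic model (some picked manually, as the text notes) and `dst_pts` the matching points on the stereo mesh; calling `morph` on all generic-model vertices reshapes the model while leaving its mesh topology, and therefore any per-vertex animation data, unchanged. The affine term guarantees that a landmark mapping which is purely affine is reproduced exactly.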

Facial Gesture Analysis: Sensing and Portrayal of Expressive Faces

Emotions (anger, happiness, sadness, etc.) are inseparable components of natural human speech. Consequently, synthetic speech can reach the level of human speech only if it can convey emotions. We follow data-driven methods to add emotions to computer speech. Our approach is based on "emotional" data collected for each of the targeted emotions (anger, sadness, happiness, and frustration). The collected data is segmented into smaller speech units, which are later concatenated to produce the required emotional synthetic output. Adding emotions increases the naturalness and variability of synthetic speech and brings it closer to the level of natural speech. The wide range of applications based on human-machine interaction, the need for more listenable systems for people with disabilities, and recent developments in the movie industry employing virtual actors are among the motivating factors for the project.
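To make the segment-and-concatenate step concrete, here is a minimal sketch assuming an inventory keyed by (emotion, unit label), where each entry holds the waveform samples of one recorded unit. The inventory layout, the function names, and the optional linear crossfade at unit boundaries are all assumptions; the text does not say how units are selected or joined. Real concatenative systems choose among many candidate recordings per label using target and join costs, which this sketch omits.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical inventory: (emotion, unit label) -> waveform samples.
Inventory = Dict[Tuple[str, str], List[float]]


def crossfade_concat(a: Sequence[float], b: Sequence[float], overlap: int) -> List[float]:
    """Join two sample sequences, linearly blending `overlap` samples."""
    if overlap <= 0 or not a:
        return list(a) + list(b)
    overlap = min(overlap, len(a), len(b))
    out = list(a[: len(a) - overlap])
    for i in range(overlap):
        # Weight ramps from the end of `a` toward the start of `b`.
        w = (i + 1) / (overlap + 1)
        out.append((1 - w) * a[len(a) - overlap + i] + w * b[i])
    out.extend(b[overlap:])
    return out


def synthesize(units: Sequence[str], emotion: str,
               inventory: Inventory, overlap: int = 0) -> List[float]:
    """Concatenate the recorded units for `emotion` in the requested order."""
    output: List[float] = []
    for label in units:
        key = (emotion, label)
        if key not in inventory:
            raise KeyError(f"no {emotion!r} recording of unit {label!r}")
        output = crossfade_concat(output, inventory[key], overlap)
    return output
```

Keeping a separate inventory per emotion is what lets the same unit sequence be rendered as, say, angry or sad speech simply by changing the `emotion` argument.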